NOTE

- Once you have determined that the policy deny count is significantly high or higher than usual, the next step is to identify the source of this traffic.

Note: In most cases, the issue is caused by UDP syslog traffic that keeps using the same 6-tuple.

UDP syslog traffic is usually high volume and uses the same source-port (socket).

How to identify the offender

- Run "show running resource-monitor ingress-backlogs" and look for high usage on flow_slowpath. This is evidence of the issue, but unfortunately the output gives no clue about the IP/tuple behind the slowpath load.

- Find the related deny traffic logs. The catch-all policy may have numerous deny logs for the specific tuple.

- As the full tuple is shown in the log, this is an ideal way to understand the nature of the traffic (you can identify or infer the source, destination, application, etc.).

- If no such logs are seen, make sure the catch-all and other deny policies have logging enabled.

- The hit count of each policy can be a clue to determine which policy is denying the packets. Deny traffic logging may need to be enabled on that policy to capture the tuple.

- Run "show session packet-buffer-protection". It shows Packet Buffer Protection detection information, which can include the source/destination IP.

- In the threat log, filter for PBP events. They also give a clue about the source IP, or the source and destination IP (search for "PBP" in the threat log filter).

- Drop stage pcap or external pcap

- You can enable a drop stage pcap with the packet-count option (with as specific a tuple filter as you can). The packet-count option disables the pcap automatically after a specified number of packets has been captured. (All other dropped packets are included, but you should be able to infer which is the abuser, as its drop rate is high.)

- An external pcap in the customer network is also helpful.

- ACC is not useful for identifying the attacker, as deny traffic logs (packet/byte count = 0) are not included in the report.
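Once deny logging is enabled, the heaviest tuple can be picked out by counting log entries per tuple. The following is a minimal Python sketch, assuming the deny traffic logs have been exported to CSV. The column names (`src`, `dst`, `sport`, `dport`, `proto`, `from_zone`) and the sample rows are hypothetical and must be adjusted to match the actual export.

```python
import csv
import io
from collections import Counter

# Hypothetical column names; adjust to the header of your exported log.
TUPLE_FIELDS = ("src", "dst", "sport", "dport", "proto", "from_zone")

def top_denied_tuples(csv_text, limit=3):
    """Count deny log rows per tuple and return the most frequent ones."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(tuple(row[f] for f in TUPLE_FIELDS) for row in reader)
    return counts.most_common(limit)

# Made-up sample data for illustration only.
SAMPLE = """src,dst,sport,dport,proto,from_zone
10.1.1.5,10.2.2.9,514,514,udp,trust
10.1.1.5,10.2.2.9,514,514,udp,trust
10.1.1.7,10.2.2.9,40000,53,udp,trust
10.1.1.5,10.2.2.9,514,514,udp,trust
"""

for flow, hits in top_denied_tuples(SAMPLE):
    print(hits, flow)
```

A tuple that dominates the count is a good candidate for the offender and for the filter of a drop stage pcap.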

Mitigation

On the customer network side,

1. Once the source of the denied traffic is identified, check if it is feasible to stop this traffic at the source or closer to the source.

For example:

- Stop the traffic from the source

- Example: If there is a device that is flooding syslog messages to a particular destination, you can remove the syslog server destination from that device to stop the flood.

- Discard the traffic with an ACL on a network device before it reaches the NGFW

- Discard unnecessary traffic with a static route to a null interface

- In a typical case, the customer's core switch points its default route at the NGFW (for example, when segregating trust/untrust zones), so packets that cannot be resolved in the core switch's routing table are forwarded to the NGFW. Eliminating such unnecessary traffic before it reaches the NGFW can be an ideal solution.

On the NGFW,

2. Allowing traffic with security policies

- Before moving on to mitigation techniques, confirm whether the traffic causing the issue is supposed to be denied or allowed.

- If the traffic needs to be allowed, create the required security policy. Once the traffic is allowed, a session is installed and the traffic is no longer subject to slowpath processing.

- If the impacting traffic pattern is not yet known, you can try creating a security policy that allows high-volume traffic such as syslog from internal/trusted zones (apply security profiles as required) and check whether packet descriptor usage goes down.
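The effect of allowing the traffic can be illustrated with a toy model. This is a conceptual Python sketch only, not a description of PAN-OS internals: it assumes that a packet with no installed session triggers one policy lookup, that an allowed packet installs a session, and that a denied packet installs nothing. The function name and the flow values are hypothetical.

```python
def process(packets, policy_allows):
    """Toy model: return (slowpath_lookups, fastpath_hits) for a packet stream."""
    sessions = set()           # flow keys with an installed session
    slowpath = fastpath = 0
    for key in packets:
        if key in sessions:
            fastpath += 1      # existing session: no policy lookup needed
            continue
        slowpath += 1          # no session: policy lookup on the slowpath
        if policy_allows(key):
            sessions.add(key)  # allowed traffic installs a session
        # denied traffic installs nothing, so the next packet repeats the lookup
    return slowpath, fastpath

# Hypothetical 6-tuple: src IP, dst IP, src port, dst port, protocol, zone.
syslog_flow = ("10.1.1.5", "10.2.2.9", 514, 514, "udp", "trust")
stream = [syslog_flow] * 1000

print(process(stream, lambda key: False))  # denied:  (1000, 0)
print(process(stream, lambda key: True))   # allowed: (1, 999)
```

In this model, a denied flow that reuses the same tuple costs one lookup per packet, while the same flow, once allowed, costs a single lookup.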

3. Protection against known offenders (DoS policy deny)

- If the exact IPs of the hosts causing the issue are known, creating a DoS policy with the action “Deny” will help.

- Make the DoS policy rules as specific as possible (source/destination zone, IPs, service port).

- Since DoS policies are evaluated before the security policy lookup and do not have a large number of entries, packets are blocked earlier, which greatly saves firewall resources.

- As an interim step, this helps confirm that the tuple(s) specified in the DoS policy really are the abuser. Once the traffic pattern is confirmed, the next step (DoS policy protect) can be applied with a more finely tuned configuration.

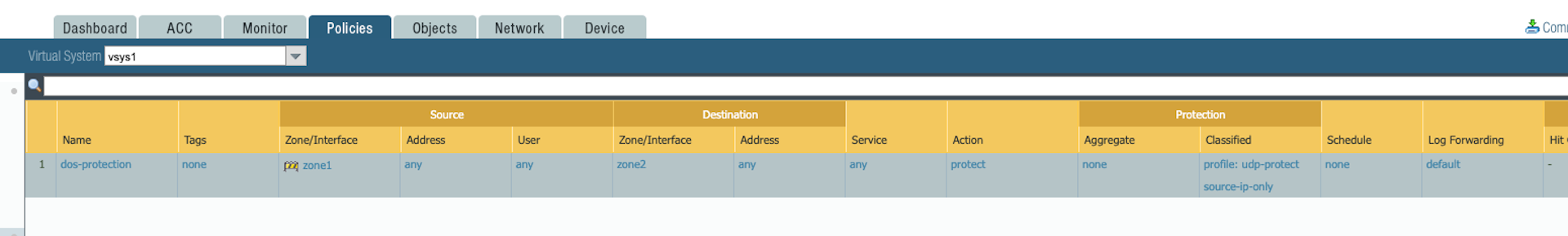

4. Protection against unknown offenders (DoS policy protect)

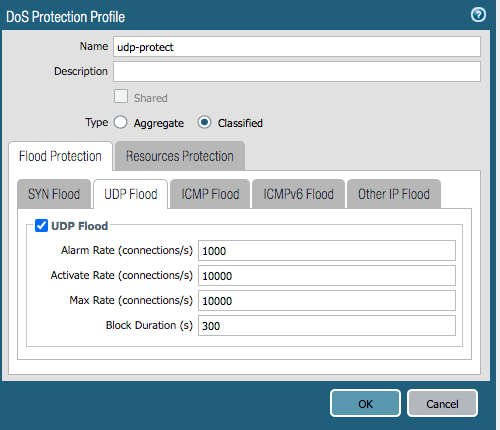

- Creating a DoS classified policy with the action “Protect”

- A classified DoS policy can be applied with action “Protect” and address matched to “source-ip only” or “src-dest-ip-both”.

Note: The configured threshold values are only examples; they need to be tuned for the customer environment.

- Once the configured threshold is reached, the DoS policy creates an entry in the DoS ip-block-table, and matching packets are then dropped without being subjected to slowpath.

- In devices that have an offload processor, the block table is installed in the offload hardware to further reduce the load on the DP CPU.

- For further reading, refer to: Monitor Block List and DoS Protection Profiles and Policy Rules

Caveat:

- Thresholds for the classified DoS object depend on the customer's traffic pattern and network; the default values may not be applicable to all environments.

- For slowpath deny attacks, only “source-ip only” or “src-dest-ip-both” works; using "destination-ip only" does not help.

- For internet-facing zones, the number of source IPs can be huge, and the firewall doesn’t have the capacity to store counters for every possible IP address on the internet.

- Refer to: Classified vs Aggregate DoS profiles

5. Packet Buffer Protection (PBP)

- Packet Buffer Protection (PBP) is a feature available starting with PAN-OS 8.0.

- PBP is preferred because it is automatic and is triggered by actual resource utilization, whereas a DoS policy is triggered by a pre-configured connections-per-second threshold.

- PBP protects the firewall both from slowpath and fastpath (existing session) buffer depletion.

- The firewall monitors buffer abusers automatically.

- After reaching the configured activate threshold (default 50%), the firewall starts dropping offending traffic using Random Early Drop (RED).

- If buffer utilization stays above 80% (this threshold is internally hardcoded and not configurable) for the duration of the block hold time, a DoS block table entry is created.

- Refer to: Packet Buffer Protection

In this specific case of slowpath deny, a combination of PBP and a classified DoS policy with the action “Protect” usually provides better results.
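The two PBP thresholds described above can be read as a simple decision rule. The following Python sketch is a simplified model under stated assumptions, not the firewall's implementation: the block hold time of 60 seconds is an illustrative value, and the model ignores per-source abuser detection and the block duration that follows a block.

```python
ACTIVATE_PCT = 50      # configurable activate threshold (default per the text above)
BLOCK_PCT = 80         # fixed internal threshold per the text above
BLOCK_HOLD_TIME = 60   # seconds; assumed value for illustration

def pbp_actions(samples):
    """Return the modeled action after each (time_s, utilization_pct) sample."""
    above_since = None   # time at which utilization first exceeded BLOCK_PCT
    actions = []
    for t, util in samples:
        if util > BLOCK_PCT:
            if above_since is None:
                above_since = t
        else:
            above_since = None   # dropped back: the hold timer restarts
        if above_since is not None and t - above_since >= BLOCK_HOLD_TIME:
            actions.append("block")      # block table entry is created
        elif util >= ACTIVATE_PCT:
            actions.append("red-drop")   # RED applied to offending traffic
        else:
            actions.append("none")
    return actions

# Made-up utilization samples: (seconds, percent).
samples = [(0, 30), (10, 55), (20, 85), (50, 90), (80, 88), (90, 40)]
print(pbp_actions(samples))
```

In this model, utilization must stay above 80% for the whole hold time before a block is modeled; a brief spike only results in RED.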

Monitoring:

SNMP can be leveraged to monitor buffer utilization among other things. DP resources are part of HOST-RESOURCES-MIB. More information can be found here:

SNMP for Monitoring Palo Alto Networks Devices

snmp-mibs

List of useful OIDs:

1. Description - .1.3.6.1.2.1.25.2.3.1.3.xxxx

Example:

.1.3.6.1.2.1.25.2.3.1.3.1011 = STRING: "Slot-1 Data Processor-0 Hardware Packet Buffers"

.1.3.6.1.2.1.25.2.3.1.3.1111 = STRING: "Slot-1 Data Processor-1 Hardware Packet Buffers"

2. Hardware Packet Buffer pool size - .1.3.6.1.2.1.25.2.3.1.5.xxxx

Example:

.1.3.6.1.2.1.25.2.3.1.5.1011 = INTEGER: 17203

.1.3.6.1.2.1.25.2.3.1.5.1111 = INTEGER: 17203

3. Current Buffer Utilization - .1.3.6.1.2.1.25.2.3.1.6.xxxx

Example:

.1.3.6.1.2.1.25.2.3.1.6.1011 = INTEGER: 122

.1.3.6.1.2.1.25.2.3.1.6.1111 = INTEGER: 128
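The three OIDs above are the hrStorageDescr, hrStorageSize, and hrStorageUsed columns of the HOST-RESOURCES-MIB storage table, so buffer utilization is simply used divided by size. The following Python sketch computes the percentage from walk output in the text format shown in the examples; the parsing assumes that exact `OID = TYPE: value` layout, and the function names are illustrative.

```python
# Column OIDs from HOST-RESOURCES-MIB (hrStorageTable).
DESCR = "1.3.6.1.2.1.25.2.3.1.3"  # hrStorageDescr
SIZE = "1.3.6.1.2.1.25.2.3.1.5"   # hrStorageSize
USED = "1.3.6.1.2.1.25.2.3.1.6"   # hrStorageUsed

def parse_walk(text):
    """Group 'OID = TYPE: value' lines by their trailing row index."""
    rows = {}
    for line in text.splitlines():
        if " = " not in line:
            continue  # skip blank or unrelated lines
        oid, value = line.split(" = ", 1)
        column, index = oid.strip().lstrip(".").rsplit(".", 1)
        value = value.split(": ", 1)[1].strip().strip('"')
        rows.setdefault(index, {})[column] = value
    return rows

def buffer_utilization(text):
    """Return {description: percent used} for rows that have size and used values."""
    result = {}
    for cols in parse_walk(text).values():
        if SIZE in cols and USED in cols and int(cols[SIZE]) > 0:
            name = cols.get(DESCR, "unknown")
            result[name] = round(100.0 * int(cols[USED]) / int(cols[SIZE]), 2)
    return result

# Values taken from the examples above.
WALK = """
.1.3.6.1.2.1.25.2.3.1.3.1011 = STRING: "Slot-1 Data Processor-0 Hardware Packet Buffers"
.1.3.6.1.2.1.25.2.3.1.3.1111 = STRING: "Slot-1 Data Processor-1 Hardware Packet Buffers"
.1.3.6.1.2.1.25.2.3.1.5.1011 = INTEGER: 17203
.1.3.6.1.2.1.25.2.3.1.5.1111 = INTEGER: 17203
.1.3.6.1.2.1.25.2.3.1.6.1011 = INTEGER: 122
.1.3.6.1.2.1.25.2.3.1.6.1111 = INTEGER: 128
"""

for name, pct in buffer_utilization(WALK).items():
    print(f"{name}: {pct}%")
```

With the example values, both data processors are below 1% packet buffer utilization.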

DoS related counters via SNMP (part of PAN-COMMON-MIB):

| MIB Identity | Counter | Description | OID |
| --- | --- | --- | --- |
| panFlowPolicyDeny | flow_policy_deny | Session setup: denied by policy | .1.3.6.1.4.1.25461.2.1.2.1.19.8.10 |
| panFlowDosBlkNumEntries | flow_dos_blk_num_entries | Number of entries in DOS block table | .1.3.6.1.4.1.25461.2.1.2.1.19.8.2 |
| panFlowDosBlkSwEntries | flow_dos_blk_sw_entries | Number of entries in DOS Software block table | .1.3.6.1.4.1.25461.2.1.2.1.19.8.33 |
| panFlowDosBlkHwEntries | flow_dos_blk_hw_entries | Number of entries in DOS Hardware block table | .1.3.6.1.4.1.25461.2.1.2.1.19.8.34 |
| panFlowDosDropIpBlocked | flow_dos_drop_ip_blocked | Packets dropped: Flagged for blocking and under block duration by DoS or other modules | .1.3.6.1.4.1.25461.2.1.2.1.19.8.13 |
| panFlowDosRuleDrop | flow_dos_rule_drop | Packets dropped: Rate limited or IP blocked | .1.3.6.1.4.1.25461.2.1.2.1.19.8.23 |
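Counters such as flow_policy_deny only ever increase, so what matters for alerting is the rate of change between two polls. The following Python sketch derives a per-second rate from two samples; the 64-bit counter width and the sample values are assumptions for illustration, so check the object's SYNTAX in PAN-COMMON-MIB before relying on the wrap handling.

```python
def counter_rate(prev, curr, interval_s, width_bits=64):
    """Per-second rate between two samples of an increasing counter."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    delta = curr - prev
    if delta < 0:
        # The counter wrapped between polls (assumed width: width_bits).
        delta += 2 ** width_bits
    return delta / interval_s

# Made-up flow_policy_deny samples taken 30 seconds apart.
print(counter_rate(1_000_000, 1_450_000, 30))  # 15000.0 denies per second
```

Comparing this rate against a baseline collected during normal operation helps determine whether the policy deny rate is higher than usual.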